An A-Z Outline for Latent Dirichlet Allocation (LDA) in Topic Modeling

Latent Dirichlet Allocation (LDA) – An Overview

In the present-day Information age, as Data Analytics becomes a potential source for framing effective business strategies, we need tools, techniques, and applications that could enable us performance analytics better and faster.

Topic modeling has emerged as one such effective tool for the analysis of text and content. Topic modeling works by identifying multiple topics from huge piles of information – whether assorted, unorganized or messed up. So, within that, there are a number of approaches that help. Latent Dirichlet Allocation (LDA) is one such prominent approach that can magnificently perform topic modeling for enterprises and marketers — empowering them effectively save time and derive insights.

What is LDA?

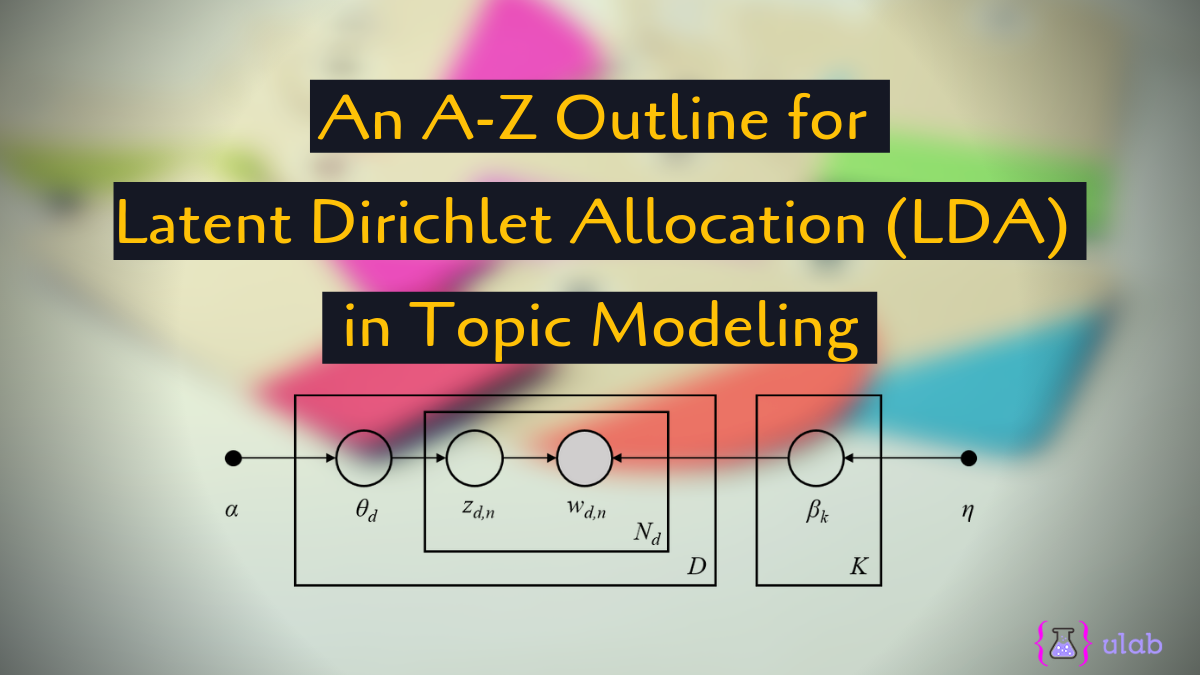

A popular yet simplistic form of Topic Modelling, the Latent Dirichlet Allocation is about a recurrent pattern seen in co-occurring terms within a corpus. For instance, anything relating to the same subject will come under the same category. If you talk of dairy, animals, grass, and farm. These words will all come under the same corpus and be automatically be re-arranged. LDA works by generating topics based on the frequency of words.

How LDA works?

It works effectively by generating data sets from the piles. From a given mixture of topics in a data set or document, LDA efficiently classifies the words of similar meaning or context. It works through various packages formed on basis of Python programming language.

Which all Frameworks or Packages does LDA Support?

It supports the Gensim, NLTK and stop_words packages in Python. Let us learn about them one by one:

NLTK

NLTK works through the Natural Language Processing (NLP) toolkit. It effectively uses an unsupervised machine learning method to identify the guiding semantic structures within a document. This model can be smartly used for analysis of any form of content within a document – whether they include tags, posts, or even web copy.

Gensim

This one is a multicore machine model offering a faster implementation of new unseen documents or less explored documents. This can be used for online training through updated documents. The algorithm is Gensim-based LDA topic modeling is mostly streamed for training documents without entailing any random access. It runs quite effectively using a constant memory without affecting the size of memory footprint or RAM.

Stop_Words

This process entails the tokenization of stop words in a data set. It is usually removed from the NLTK framework. It works through the NLP model as well.

How to Import and Cleanse your Documents in LDA?

In order to generate an effective topic model, data importing and clean-up is critical. This step serves as a prelude to topic modeling. Often also called garbage and garbage out step, this step ensures you get the most relevant text in for the modeling process. It entails the steps of tokenization, Stopping and Stemming of the words.

Tokenization is about creating segments of the content or text in a way that they return to their atomic elements. There are various ways tokenization can be done. It works effectively on words that do not use apostrophize or hyphens. It may confuse in words that combine two such as “isn’t”. In such cases, you might need to add a loop.

Stop Words is a method used for removing the connectors and conjunctions in a given sentence. These stop words must be removed from the tokens. It is important to remove connections of less meaningful words such as “the”, “who”, etc from the tokens to avoid cluttering.

Stemming comes as the next filter in the direction to remove the repetition of words that have topically the same meaning. This step is critical for stopping the data sets from becoming too verbose. This algorithm is one of the most significant in a given LDA model because it works as an intelligent automation within tokenization.

How to Shift to Document-Term Matrices?

Now all your tokenization, stemming and stop wordage comes to an actual use where you create a term matrix. At this stage, your relevant and filtered text converts into a significant and relevant set of lists that are ready to ooze out multiple topics. The actual usage of the LDA technique comes into play here. The document-term matrix serves as the basis for the actual application of the LDA model.

It works the best within the gensim documentation parameter. It specifies how many topics a user wishes to extract or generate at a given point in time. It also ensures accuracy and cuts down any redundancy. It also checks how many times the laps occur in a model within the corpus. The higher the number of passes, the more precise will the model becomes. When the corpus is large there could be a sloth in the passes.

Final Review of Results or Topics Extracted

The topics are obtained through the Document Term Matrix and then comes this stage where the user can examine the topics. The topics are generated in a fashion or sequence and separated automatically through commas. Each topic generally contains three to four words. As a user, you may still have to make sense of the words used in a single topic. They may not have connectors and hence, may not grammatically form a sense. For instance, the topic makes look like Baby mother love milk. Now, these serve as the hint words for you to derive multiple topics. Such topics form great insights when you try to find patterns through customer reviews or survey results. You can potentially save a lot of time by automating the topic modeling.

The higher the number of topics extracted, the more effective the results seem. However, it also depends on the size of your data input. Taking up an abnormally high volume of data in a single shot may again not be advisable.

The Top Significance of the LDA Model

LDA works through a plain logic that every source document discussed multiple topics and its job is to separate out the topics in clusters. The separation has to be done on the basis of certain logic. It works by looking at the repetition of certain words and the number of repetitions.

References for Further Reading

Articles:

Topic Modeling in Python with NLTK and Gensim

Guides and Tutorials:

Models.Idamodel – Latent Dirichlet Allocation

Sklearn.decomposition.Latent Dirichlet Allocation

EBooks:

Literary Analysis with NLP: LDA Topic Modeling

Conclusion

LDA serves as one of the better topic modeling techniques and effectively supports most packages in Python. It is adaptable and simplistic and hence, the favorite of engineers. It has the capability to easily generate more than 5 topics in a single go.

The challenges associated with LDA are its extreme dependability on tokenization and pre-production techniques of labeling, stop words, etc. So, it expects the user to create some segregated lists initially. This sometimes adds up tediousness for the user.

If you would like to add anything, your comments and suggestions are welcome. If you have questions, rather, we’d be glad to address them. Connect with us through the comments section below.